Just to underline: here's how you delete the recordings Siri saves on Apple servers. None of these settings tell you they do that until after you uncheck them. https://t.co/Bn9gDwlvjq pic.twitter.com/5ztGRQgsYh

— Dieter Bohn (@backlon) August 2, 2019

Apple’s handling of your Siri voice recordings is a really clear sign that its strident privacy stance has given the company a blind spot: when it DOES collect your data, it isn’t as good as everybody else at giving you controls for seeing and deleting it https://t.co/9p6zmSkein pic.twitter.com/M98BNLzXFP

— Dieter Bohn (@backlon) August 2, 2019

Deleting your Siri voice recordings from Apple’s servers is confusing — here’s how https://t.co/MfdhmAguo0 pic.twitter.com/NS9AafKfMX

— The Verge (@verge) August 2, 2019

Good write-up here https://t.co/5e6PmV1CAj pic.twitter.com/z6PbLvFlnC

— Alaric Aloor ⚽️ (@AlaricAloor) August 2, 2019

Here's @backlon on Apple's Siri privacy situation -- Apple retains the right to basically keep recordings forever, and today's rushed-out announcement is only about humans grading recordings -- not *not saving recordings of you in the first place* https://t.co/NvOpEfCry9

— nilay patel (@reckless) August 2, 2019

How to delete Siri voice recordings from Apple's servers

— 데드캣 (@deadcatssociety) August 2, 2019

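It recently came to light that Siri randomly recorded audio that was then used for research (even the sounds of people having sex were recorded). Here's how to delete your voice recordings from Apple's servers: https://t.co/9L14TRZ4gy pic.twitter.com/3yfnS6bRwR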

Apple and Google are temporarily halting the human review of Siri and OK Google queries. https://t.co/vYjuvbk3KP

— editoy (@editoy) August 3, 2019

• Apple's decision to stop people from listening to Siri queries follows a report last week that contractors reviewing the recordings heard private conversations and sexual activity.

“Some voice assistants are recording people having sex and then random humans listen to those recordings” https://t.co/JaaV9BvwN3

— Health Tech Law (@HealthPI) August 3, 2019

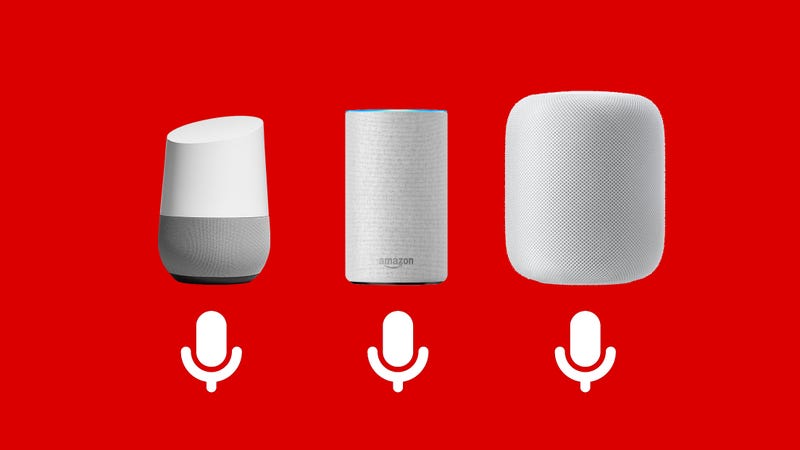

Somehow putting Alexa in my home feels 3000% more disconcerting than any other smart assistant (ok except Facebook Portal) https://t.co/1Hi8WPJyI3

— Owen Williams ⚡ (@ow) August 2, 2019

NOPE! So If You Are Having Sex Be VERY LOUD And Enthusiast! Give “Them,” Something To Listen To! Just Saying! ☮️❤️ https://t.co/RtqC4UIp8r

— T Bohn (@TheTBohn) August 2, 2019

Deleting your Siri voice recordings from Apple’s servers is confusing — here’s how https://t.co/MfdhmzYSZq pic.twitter.com/Io90cASuir

— The Verge (@verge) August 3, 2019

How to delete your Siri voice recordings (via @verge) https://t.co/KPYp6D7FyC

— IPVanish (@IPVanish) August 2, 2019

Apple effectively treats privacy as a selling point, yet there doesn't seem to be any explicit option or site for stopping the collection of voice data from Siri or Dictation. https://t.co/dvrUpZc9RI

— 푸른곰 (@purengom) August 2, 2019

Let's face it, Google and Apple are probably listening to everyone, all of the time... along with many phones. @CommonsCMS https://t.co/L6Kr4aTyjf

— Dr Matt Prescott (@mattprescott) August 2, 2019

Apple & Google are temporarily stopping workers listening to voice recordings from #smart speakers & #virtual #assistants.

— Jason R.C. Nurse (@jasonnurse) August 2, 2019

It follows a report that Apple's 3rd-party contractors heard people having sex and discussing private #medical info. https://t.co/WepAO36xoF #privacy #IoT

Apple and Google stop workers playing back voice recordings https://t.co/UPQXxx9HNV #Privacy #pii #cookies #marketing #eu #gpdr #surveillance #tracking #monitoring #profiling #persona #UserData #righttobeforgetten pic.twitter.com/2dhVcqKj2f

— Rich Tehrani (@rtehrani) August 3, 2019

‘Could you turn off the light, please?’ https://t.co/8PTdm5EJLb

— the oranckay (@oranckay) August 2, 2019

