UCSF neuroscientists just reported new progress in ongoing efforts to one day restore communication to people with speech loss due to paralysis. #science https://t.co/No5XrmTma2

— UC San Francisco (@UCSF) July 30, 2019

“Doctors have turned the brain signals for speech into written sentences in a research project that aims to transform how patients with severe disabilities communicate in the future.” https://t.co/xSU9GUV2Bs

— Belinda Barnet (@manjusrii) July 31, 2019

There's been much work on #AI speech recognition. But the implications for direct #brain to speech decoding, for patients w/ paralysis, stroke or locked in, is remarkable. =Chapter 2 @NeurosurgeryUCSF @UCSF pioneering efforts https://t.co/XQ21fB2eym @NatureComms @UCSFMedicine pic.twitter.com/VUT2UuNxdG

— Eric Topol (@EricTopol) July 30, 2019

seems like a good time for a reminder that Facebook has already refused to rule out using your brain activity for advertising purposes https://t.co/8o5lDGIvWA https://t.co/I8sG3KDhYi

— Sam Biddle (@samfbiddle) July 30, 2019

Imagined Input for #AR Glasses: BCI could offer hands-free communication without saying a word. Check out the latest entry in our Inside @facebook Reality Labs blog series to learn more // https://t.co/vF9YQR6hx9 pic.twitter.com/VcwhDtogJL

— TechAtFacebook (@techatfacebook) July 30, 2019

Today we’re sharing an update on our work to build a non-invasive wearable device that lets people type just by imagining what they want to say. Our progress shows real potential in how future inputs and interactions with AR glasses could one day look. https://t.co/ilk192GwAR

— Boz (@boztank) July 30, 2019

Neuroscientists decode brain speech signals into actual sentences - https://t.co/zo64LCp4tq good news for the paralysed; not so sure for the rest of us...

— Glyn Moody (@glynmoody) July 30, 2019

Neuroscientists decode brain speech signals into written text.

Study is the 1st to demonstrate that specific words can be extracted from brain activity & converted into text rapidly enough to keep pace with natural conversation. https://t.co/9gRe3yWuXZ pic.twitter.com/b5wetcpnga

— David Ojcius (@ojcius) July 31, 2019

Lots of work has been done to decode speech from brain signals, this group is constraining the problem by also predicting it based on what we hear and sense, leading to superior results: https://t.co/wHskfFx3Iy

— Shahin Farshchi (@Farshchi) July 30, 2019

this sounds like a great way to show you ads for every damn thing that pops into your mind https://t.co/fv1sdaUyq2

— Owen Williams ⚡ (@ow) July 31, 2019

Reactions to: https://t.co/RWmAyEpIcu hmm...

"Understanding commands" is absolutely not the same as "Reading thoughts"

Does not warrant: Facebook! Targeting! Advertising! Be very afraid!

My impression from this: https://t.co/L9jfvPBMyd is:

61% to 76% accurate lie detector.

— Stasys Bielinis (@Staska) July 31, 2019

Facebook's brain-interface project takes it into "human subjects" research as it finances invasive (and non invasive) brain research in academia. https://t.co/6qkFoohBFX

— Antonio Regalado (@antonioregalado) July 30, 2019

NEW: Facebook is trying to read people’s thoughts, and no one’s ready for the consequences. https://t.co/2G1aMwU9Kt

— MIT Technology Review (@techreview) July 30, 2019

A Facebook-funded study has resulted in an early-stage brain-machine interface that decodes speech—and even thoughts—with high accuracy. https://t.co/wRDfVJlFIL

— IEEE Spectrum (@IEEESpectrum) July 30, 2019

Facebook gets closer to making its brain-reading computer a reality https://t.co/i6kugDkmwE

— Neuralink Feed (@Neuralink1) July 30, 2019

2019: Millions unwittingly give FaceApp rights to all their photos.

2021: Millions unwittingly give FB rights to all of their thoughts. https://t.co/YqnmKOaIkO

— Sterling Okura (@SterlingOkura) July 30, 2019

Brain-computer interfaces are developing faster than the policy debate around them. @CaseyNewton explains what's possible, and what shouldn't be in The @verge https://t.co/3sbqIA4Sdq @RobMcCargow @Happicamp #technology #braincomputer #wearables

— Gareth Willmer (@GWillmer_PwC) July 31, 2019

Brain-computer interfaces are developing faster than the policy debate around them https://t.co/5P1xdP88Cb pic.twitter.com/sw23ytETso

— The Verge (@verge) July 31, 2019

Brain-computer interfaces are developing faster than the policy debate around them - The Verge https://t.co/B61I7OtcoS

— Neuralink Feed (@Neuralink1) July 31, 2019

Brain-computer interfaces are developing faster than the policy debate around them https://t.co/mcaPf4MgNI via @Verge

— Gerd Leonhard (@gleonhard) July 31, 2019

Facebook's 'brain-computer interface' could let you type with your mind https://t.co/mm4qHPJZ8R via @DigitalTrends

— Chuck Brooks (@ChuckDBrooks) July 31, 2019

Great. Let's allow Zuckerberg into our minds. What could possibly go wrong? https://t.co/3rskvo81Aj

— Jasper Hamill (@jasperhamill) July 31, 2019

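A paper from one of Facebook's research collaborators. They succeeded in reading cortical activity with implanted electrodes (ECoG) and generating conversation from it.

FB emphasized non-invasive approaches at an earlier conference, but in reality that is hard. https://t.co/V5M9KEwGTq

— Kazutaka Okuda(奥田一貴) (@Kztk_2) July 31, 2019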

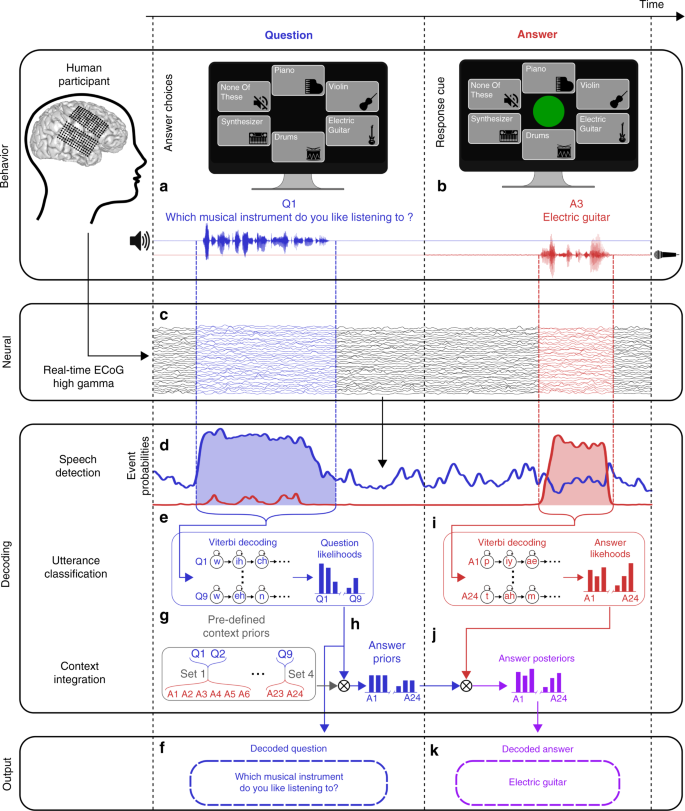

https://t.co/TfpxhLCLAs Real-time decoding of question-and-answer speech dialogue using human cortical activity by David Moses, Matthew Leonard, Joseph Makin & Edward Chang

— ASSC (@theASSC) July 31, 2019

Real-time decoding of question-and-answer speech dialogue using human cortical activity by Chang lab: https://t.co/IgzK0CYbpo

— Mick Crosse (@mick_crosse) July 30, 2019

New academic study:

"Real-time decoding of question-and-answer speech dialogue using human cortical activity" —@Facebook

As I have been saying for quite some time, the only thing you can read is the silent inner voice of the Phonological Loop. #VoiceFirst https://t.co/pp5qjF4LYK

— Brian Roemmele (@BrianRoemmele) July 30, 2019
