I read the information Apple put out yesterday and I'm concerned. I think this is the wrong approach and a setback for people's privacy all over the world.

— Will Cathcart (@wcathcart) August 6, 2021

People have asked if we'll adopt this system for WhatsApp. The answer is no.

"Child pornography is so repulsive a crime that those entrusted to root it out may, in their zeal, be tempted to bend or even break the rules. If they do so, however, they endanger the freedom of all of us." (US v. Coreas) https://t.co/ckDcKaVnTT

— Sam Adler-Bell (@SamAdlerBell) August 6, 2021

given that CSAM scanning is only enabled for users who opt into icloud photo backups, i'm guessing apple would have built it into icloud if they could. they can't because of end-to-end encryption. https://t.co/gNh5ps6DbN

— yan (@bcrypt) August 6, 2021

Re-reading all of Apple's compromises in China to cater to the government there is interesting in light of the company making a system to scan for images stored on phones https://t.co/aeC2wd4nlq pic.twitter.com/cbCDfZPbJM

— Joseph Cox (@josephfcox) August 6, 2021

Update: These initial expressions of hesitance from Whatsapp are at least a small sign that there is some fight left in corporate e2e providers to reject mandates of on-device mass surveillance. Some reasons for optimism there. https://t.co/I0Qahy7SYn

— Sarah Jamie Lewis (@SarahJamieLewis) August 6, 2021

We previously obtained a sample of malware deployed in Xinjiang that scans phones for 70,000 specific files related to politics, Uighurs, etc. Why wouldn't Chinese authorities try to get Apple to leverage its new child abuse system to instead fulfil this https://t.co/NZxIJIuOC8

— Joseph Cox (@josephfcox) August 6, 2021

Latest reaction to Apple's child safety plans:

— Tim Bradshaw (@tim) August 6, 2021

EU?

India ?

UK ?

rest of Big Tech ?

WhatsApp ? https://t.co/cmVsQ0N0bb

Critics of Apple's new plan don't see a better-engineered system that improves on what Google and Microsoft have been doing for years. Instead, they see a significant shift in policy from the company that said "what happens on your iPhone stays on your iPhone." https://t.co/fud78Wn4pF

— kif (@kifleswing) August 6, 2021

WhatsApp gives a strong no to client-side scanning. “It’s not how technology built in free countries works.” https://t.co/sA19g2Jrbw

— Kurt Opsahl (@kurtopsahl) August 6, 2021

Note that the memo doesn’t articulate what they claim people are actually ‘misunderstanding’. This response is exactly the kind of passive aggressive rationalization that I’d expect from an abusive bully that is convinced that he’s ‘really a good guy’.

— Steve Raikow (@infocyte) August 6, 2021

head of WhatsApp on Apple's moves yesterday https://t.co/dVkw5Eqbdl

— rat king (@MikeIsaac) August 6, 2021

NCMEC executive director calls people worried about tech companies scanning every photo on your phone "the screeching voices of the minority", in a note an apple VP calls "incredibly motivating" https://t.co/0hUS5AZRl9

— Ridley @ a safe distance (@11rcombs) August 6, 2021

Oh cool, so the counterargument to people's concerns about Apple devices policing photos using a cryptographic Rube Goldberg machine is... an ad hominem https://t.co/DBzJ92V5Ku

— Tony “Abolish ICE” Arcieri (@bascule) August 6, 2021

The call to “think of the children” creates unassailable cover for expanding censorship and surveillance. What moral person doesn’t want to do everything possible to stop the distribution of CSAM? https://t.co/LRsgxtOg7I

— Annemarie Bridy (@AnnemarieBridy) August 6, 2021

The fact that internal memos about content scanning are leaking from Apple does not say good things about support for those policies internally. https://t.co/mibWaZCfOS

— Matthew Green (@matthew_d_green) August 6, 2021

In an internal memo, Apple employees and teams that worked on the child-safety features have acknowledged the misunderstandings and have said they will continue to explain the details of the feature. https://t.co/3zVQNkAbbY

— Apple Tomorrow (@Apple_Tomorrow) August 6, 2021

Interesting WhatsApp declaration. Cathcart links to a blogpost (CEI = child exploitation imagery, same thing as CSAM = child sexual abuse material). Note the number of accounts zapped *per month*. https://t.co/NCzxBN4CIG pic.twitter.com/UIOLvdZ1Ja

— Charles Arthur (@charlesarthur) August 6, 2021

What will happen when spyware companies find a way to exploit this software? Recent reporting showed the cost of vulnerabilities in iOS software as is. What happens if someone figures out how to exploit this new system?

— Will Cathcart (@wcathcart) August 6, 2021

The danger with using the "Think of the children!" argument to let Apple away with their invasion of your devices and data is it doesn't take a leap to see how once the precedent is established it can trivially be misused. https://t.co/8K9p8w6FOp

— Will Hamill (@willhamill) August 6, 2021

Instead of focusing on making it easy for people to report content that's shared with them, Apple has built software that can scan all the private photos on your phone -- even photos you haven't shared with anyone. That's not privacy.

— Will Cathcart (@wcathcart) August 6, 2021

the head of WhatsApp has concerns about Apple’s image scanning approach. “Apple has long needed to do more to fight CSAM, but the approach they are taking introduces something very concerning into the world.” https://t.co/v5TmeZ5gJp

— Tom Warren (@tomwarren) August 6, 2021

How long has Facebook waited for a chance like this to dunk on Apple for privacy violations https://t.co/XqnXtSgnEL

— Luke Metro (@luke_metro) August 6, 2021

100% agree with Will Cathcart. Wrong approach from Apple to a VERY sensitive problem. https://t.co/R9s9Htmxwp

— Eduardo Arcos (@earcos) August 6, 2021

This is an Apple built and operated surveillance system that could very easily be used to scan private content for anything they or a government decides it wants to control. Countries where iPhones are sold will have different definitions on what is acceptable.

— Will Cathcart (@wcathcart) August 6, 2021

It’s not a good look for Apple when the head of WhatsApp even thinks it’s a bad move https://t.co/sPEihhOmvO

— Saran (@SaranByte) August 6, 2021

Can this scanning software running on your phone be error proof? Researchers have not been allowed to find out. Why not? How will we know how often mistakes are violating people’s privacy?

— Will Cathcart (@wcathcart) August 6, 2021

Even the head of Facebook's WhatsApp thinks what Apple is proposing is a setback for privacy.

— Michael (@OmanReagan) August 6, 2021

Think about that for a minute. https://t.co/UV2zWqn4lt

they’re loving this

— rat king (@MikeIsaac) August 6, 2021

Will this system be used in China? What content will they consider illegal there and how will we ever know? How will they manage requests from governments all around the world to add other types of content to the list for scanning?

— Will Cathcart (@wcathcart) August 6, 2021

Hey @Apple - when @Facebook is legitimately able to claim the moral high ground against you, you've probably screwed up bad https://t.co/j4klipfVwk

— David Grunwald (@st_eppel) August 6, 2021

Apple has long needed to do more to fight CSAM, but the approach they are taking introduces something very concerning into the world.

— Will Cathcart (@wcathcart) August 6, 2021

We’ve had personal computers for decades and there has never been a mandate to scan the private content of all desktops, laptops or phones globally for unlawful content. It’s not how technology built in free countries works.

— Will Cathcart (@wcathcart) August 6, 2021

Definitely no love loss between #Apple & #Facebook $aapl $fb https://t.co/hUu0jCoXhC

— Susan Li (@SusanLiTV) August 6, 2021

Technically he is not wrong.

— tihmstar (@tihmstar) August 6, 2021

Yet hearing Whatsapp/Facebook talking about privacy is somewhat ironic afterall.

Is really annoying how jailbreaking is still absolutely neccessary to have usable devices. First it was about usability, now it is about security/privacy… https://t.co/0SHCxsLnTJ

Even WhatsApp cares about your privacy more than apple https://t.co/MAHZQZJIHi

— Highest (@TechHighest) August 6, 2021

Apple’s rake-stepping yesterday is now enabling semi-credible privacy dunks from Facebook https://t.co/7bnYZIKYg3

— Blake E. Reid (@blakereid) August 6, 2021

Even Facebook thinks Apple has gone too far https://t.co/pJR1weoPdP

— Joshua Swingle (@JoshuaSwingle) August 6, 2021

WhatsApp attacks Apple’s child safety ‘surveillance’ despite government acclaim https://t.co/Sp3tfpJm0I

— Financial Times (@FT) August 6, 2021

Apple's privacy reputation is at risk with changes it announced this week https://t.co/oOaNp5TzMq

— CNBC (@CNBC) August 6, 2021

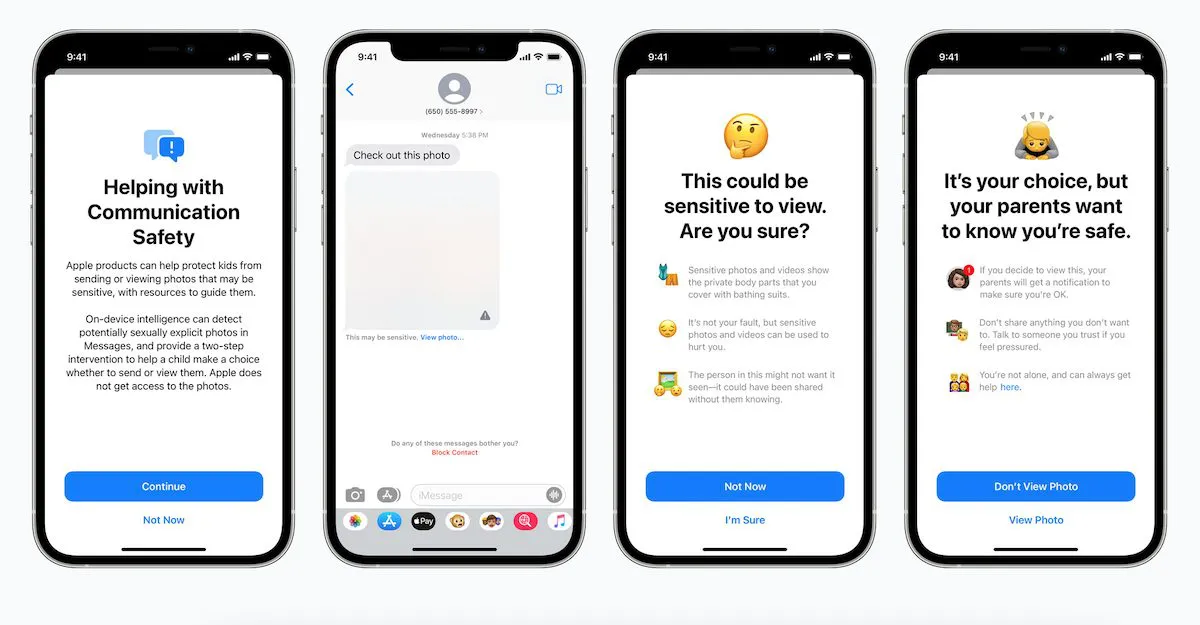

Apple is aiming to prevent child sexual abuse with two new tools:

— Jack Nicas (@jacknicas) August 5, 2021

1. A pretty complex system to spot images of child sexual abuse uploaded to iCloud.

2. A feature that flags to parents when their children send or receive nude photos in texts. https://t.co/3SJNdPLuWD

am I reading this wrong or are they planning on comparing all photos to a massive database of child sexual exploitation materials

— Doctrix Snow (@MistressSnowPhD) August 6, 2021

a database in which I’d imagine CSEM survivors would prefer not to be included

but I doubt they were asked for consent anyway, so who knows https://t.co/oUTpygQcNq

We can all agree we must do everything we can to stop child abuse.

— Santiago Mayer (@santiagomayer_) August 6, 2021

…But having Apple scan all my pictures and potentially send them to human reviewers is not something I’m comfortable with. https://t.co/7Qdh7HsIu2

Wait, initial hash database comes from the authorities in any given country and isn’t verified beforehand? Apple’s only way to find out is w/ manual review after a user has been flagged? Using same under-paid & overworked content moderation contractors that could be infiltrated? https://t.co/ab0wbpc5Ir

— Steve Troughton-Smith (@stroughtonsmith) August 6, 2021

This sucks. Any student of the FBI knows what this is: violating civil rights in the name of protecting the victims of revolting edge cases. The next step is always, ALWAYS, citing those violations as precedent. https://t.co/ehnM4XEBEm

— (@samthielman) August 6, 2021

Wonder what 2019's Apple would think of today's Apple. pic.twitter.com/BbgY6zjxrV

— Vlad Savov (@vladsavov) August 5, 2021

If the new Apple photo scanning stuff was looking at any and all photos stored on your device, I think there’d be somewhat of an ethical debate to be had. But it’s just applied to iCloud Photos, which makes it no different than any other major web service or social network.

— Benjamin Mayo (@bzamayo) August 5, 2021

All of this sounds fantastic. I do feel bad that once an iCloud user exceeds the CSAM threshold it’ll go to manual review for verification. I can’t think of a worse job that having to view child sexual abuse material all day long. https://t.co/do3kQ5yel2

— Quinn Nelson (@SnazzyQ) August 5, 2021

What are your thoughts on Apple's new Child Safety protections? It checks hashes of images going to iCloud Photo Library, on-device, against known Child Sexual Abuse Material, and if a high enough number of matches are found, prompts Apple to initiate action #poll

— Rene Ritchie (@reneritchie) August 6, 2021

Previous discussion on possibilities of false-positive detections when scanning for CSAM. Apple's approach is even more guarded and requires a threshold to be met to eliminate individual detections. https://t.co/uQIYZq9Fhi

— SoS (@SwiftOnSecurity) August 5, 2021

One interesting outcome of this move by Apple is that it addresses one major concern from policy makers.

— Miguel “metaverse” de Icaza (@migueldeicaza) August 5, 2021

Not doing anything could lead to end to end crypto being weakened or prohibited across the board by US/EU.

This takes away a major source of pressure on tech vendors.

After a long & forceful push from me & @LindseyGrahamSC, Apple’s plans to combat child sexual exploitation are a welcome, innovative, & bold step. This shows that we can both protect children & our fundamental privacy rights. https://t.co/c8Hp7jQY3a

— Richard Blumenthal (@SenBlumenthal) August 5, 2021

Apple has “driven a wedge between privacy and cryptography experts who see its work as an innovative new solution and those who see it as a dangerous capitulation to government surveillance.” https://t.co/aE8oZXiTUa

— Lauren Goode (@LaurenGoode) August 5, 2021

Also worth saying: child safety advocates have been pushing Apple to do this for a very long time. I talked with Thorn’s @juliecordua, who told me she was heartened by today’s moves https://t.co/0N0qTlWn1I pic.twitter.com/dudC94NP76

— Casey Newton (@CaseyNewton) August 6, 2021

I tried to sort through my feelings about Apple’s child safety announcements today. The risk of the slippery slope is real — but there are also real harms taking place on iCloud today, and it’s good that Apple has chosen to pay attention to them https://t.co/0N0qTlWn1I pic.twitter.com/puF0sMVL3a

— Casey Newton (@CaseyNewton) August 6, 2021

China forced Uyghurs to install an app that scanned for illegal files. Many ended up in concentration camps.

— Ryan Tate (@ryantate) August 5, 2021

Apple today forces this spying on Americans, declaring, “If you’re storing [illegal] material, yes, this is bad for you.” https://t.co/7AocVQsICl https://t.co/owSS9Boo0i

Any automated CSAM matching system introduces an exploitable vulnerability to completely ruin someone's life.

— Hector Martin (@marcan42) August 6, 2021

This is a really useful discussion of Apple's plans to scan images to detect CSAM. It's smart and elegant, but the concern is clearly mission creep. https://t.co/DfVhZ1kS3z

— Kate Bevan (@katebevan) August 5, 2021

Apple unveils changes to iPhone designed to notify parents of child sexual abuse. But some privacy watchdogs are concerned about the implications. https://t.co/PBQD1jgqWR

— NYTimes Tech (@nytimestech) August 6, 2021

personally, I think this sort of "if you have nothing to hide, you have nothing to fear" attitude should disqualify someone from being a "privacy chief" (from: https://t.co/NTwVpqKg6d) pic.twitter.com/5Ft1RbsTie

— Gordon Mohr ꧁꧂ (@gojomo) August 5, 2021

re: today's Apple news I'm trying to think of another product that will, essentially, automatically report you to the police if it determines you've broken the law *based entirely on private conduct*—Can't come up with other examples yet.

— Sam Biddle (@samfbiddle) August 6, 2021

So @apple wont unlock a mass shooters Iphone citing privacy and the slippery slope but they are happy to scan everyones images for potential child porn. Kinda like the double standard for privacy they have in China. What am I missing?

— Danny Lopez (@Danny76Lopez) August 6, 2021

the idea that a colossal tech company might suddenly decide to pursue an altruistic agenda of abuse prevention is, no joke, the most laughably barefaced complete fucking fairytale bullshit any of us will ever hear in our lives https://t.co/qqI0Wy5wDx

— Joe Wintergreen (@joewintergreen) August 6, 2021

Apple says to "protect children," they're updating every iPhone to continuously compare your photos and cloud storage against a secret blacklist. If it finds a hit, they call the cops.

— Edward Snowden (@Snowden) August 6, 2021

iOS will also tell your parents if you view a nude in iMessage. https://t.co/VZCTsrVnnc

iCloud and iMessage will be scanning your photos under the guise of "protecting children", ending their claim to end to end encryption. A tried and true strategy: incite a moral panic and leverage outrage to erode user privacy. https://t.co/DsgewLtxV0

— koush (@koush) August 6, 2021

Companies doing CSAM (used to be called 'CP') scanning is not new, they don't want to be pedo hosting dumping grounds, but the incredible nuances in pushing this technology stack to the client-side is where Apple's change is what's being discussed. I'm still watching discourse. https://t.co/gGZ8PqI3cI

— SoS (@SwiftOnSecurity) August 5, 2021

Good article on how this becomes a problem, even when not intentionally targeted. https://t.co/myXnCHPQzc

— Hector Martin (@marcan42) August 6, 2021

some thoughts on this:

— Sophie Ladder (@sophieladder) August 6, 2021

- they are only checking the images against known child sexual abuse material (CSAM). this makes me less concerned than if they were using AI (recall nudity AIs flagging pictures of sand dunes) which could incorrectly identify unrelated media https://t.co/NI1FI6kVWy

There is something quite theological in Apple’s idea that doing something in your devices is private and doing exactly the same thing in the cloud is not.

— Benedict Evans (@benedictevans) August 5, 2021

This is the worst idea in Apple history, and I don't say that lightly.

— Brianna Wu (@BriannaWu) August 5, 2021

It destroys their credibility on privacy. It will be abused by governments. It will get gay children killed and disowned. This is the worst idea ever. https://t.co/M2EIn2jUK2

Thank you @tim_cook for taking action to help catch sexual predators & prevent the horrific abuse of children—now it’s time for others in Big Tech to step up & for Congress to pass the EARN IT Act.

— Richard Blumenthal (@SenBlumenthal) August 5, 2021

Let's be real tho. This is another move towards fascist surveillance state done in the name of "protecting the children". Most things done w the intention of "doing good" in this country are actually terrible https://t.co/kFebkbIpcj

— Mistress Lienne (@mistresslienne) August 6, 2021

Apple does normal journalism practice?! How incredibly scandalous!!! How dare they! https://t.co/E2ewOL2MZh

— Sami Fathi (@SamiFathi_) August 5, 2021

Seems like Apple's idea of doing iCloud abuse detection with this partially-on-device check only makes sense in two scenarios: 1) Apple is going to expand it to non-iCloud data stored on your devices or 2) Apple is going to finally E2E encrypt iCloud? https://t.co/zQeVbAekOo

— Andy Greenberg (@a_greenberg) August 5, 2021

A better way of phrasing it: Apple likely chose to implement this system on their own terms, rather than be forced to implement a system imposed on them by legislators that might have had worst outcomes.

— Miguel “metaverse” de Icaza (@migueldeicaza) August 6, 2021

Apple: Stink different https://t.co/6Qtoly4094

— Katie Moussouris (she/her) is fully vaccinated (@k8em0) August 6, 2021

Yeah can’t stress enough how much I’m really not happy with a robot assessing my nudes. I’m going to be mad af either way, too, because if it shows them to the cops that’s a huge breach of privacy, and if it doesn’t show them to the cops it’s telling me I look old, rude. https://t.co/Zp8AJensel

— Al (@SweatieAngle) August 6, 2021

This is the opening salvo in the assault to end what shred of autonomy and privacy from corporations and the state that we have left https://t.co/iwwDHCsBsx

— Unapologetic on YT (@unapologeticYT) August 6, 2021

Couldn’t someone just hack this and then digitally plant images on the phones of dissenters? Quite powerful…Seems like an awful idea to me https://t.co/6sqZdJ4MEr

— Critical Jake Theory (@jakecoco) August 6, 2021

Louder, for the people in the back: it’s impossible to build a client-side scanning system that can only be used for sexually explicit images sent or received by children. https://t.co/vRHRTxH0I8

— Eva (@evacide) August 5, 2021

It’s really cute that Apple keeps defending the system like this, as if once an authoritarian government passes the law to require device manufacturers to scan for other stuff, the system itself will prevent that from happening. https://t.co/DArI7R3ov5

— Khaos Tian (@KhaosT) August 6, 2021

Look, I can understand disagreement towards this action but one thing needs to be made clear: Apple isn’t just scanning your phone for images of your tiny pp. They’re only looking for cryptographic hashes of child porn that’s already known or in circulation provided to them.

— Quinn Nelson (@SnazzyQ) August 5, 2021

Apple thinks photo scanning is non-negotiable — that for legal and PR reasons, you can't be a major consumer tech company and not scan users' photos — so the only way to encrypt photos on-device was to develop & implement client-side scanning.

— Peter Sterne (@petersterne) August 5, 2021

I hope they're wrong, but idk

Just to state: Apple's scanning does not detect photos of child abuse. It detects a list of known banned images added to a database, which are initially child abuse imagery found circulating elsewhere. What images are added over time is arbitrary. It doesn't know what a child is.

— SoS (@SwiftOnSecurity) August 5, 2021

This @EFF write-up explains why this backdoor is a disaster:

— Feross (@feross) August 5, 2021

"To say that we are disappointed by Apple’s plans is an understatement."

"It is a shocking about-face for users who have relied on the company’s leadership in privacy and security" https://t.co/TL3Fdnq9yV

Ah yes, the Apple that infamously and repeatedly has bent over backwards to give China deep access to device and cloud data, we're supposed to trust their spyware because lawyers got involved. Apple is not a privacy company. https://t.co/06842ueBrM

— Steve Streza (@SteveStreza) August 6, 2021

This is clever on Apple's part because if you criticise this you look like a pedo https://t.co/n2KKH584dO

— Irrational Chad (@IrrationalChad) August 6, 2021

Apple is setting a dangerous precedent by building a backdoor to surveillance.

— Minh Ngo (@minhtngo) August 6, 2021

Intent aside, if the technology can be abused by governments, law enforcement and other private actors, it will be. https://t.co/wFklBjo7pZ

https://t.co/asW0lasTRz

— Middlespace Bnuuy (@heyitsjaq) August 6, 2021

Soooo, while I am not nervous about having anything, this is still a very alarming step towards other companies and govt sifting thru ur shit.

Lmao i wonder if ppl with loli and stuff are gunna get flagged??? a lot of ppl would say that stuff is CSAM.

I can't see this being abused at all. https://t.co/DnsK8km7Ye

— Don Nantwich (@DonNantwich) August 6, 2021

Apple to scan iPhones for child sex abuse images - BBC News https://t.co/Qx5L8P0kS3

— DiamondLynne (@DiamondLynne1) August 6, 2021

Important to take further steps to fight child sexual abuse. I will discuss this issue further during my visit to Washington DC at the end of this month. https://t.co/5HWdLbv60D @MissingKids @SecMayorkas @Europol @EU2021SI @Childmanifesto @MissingChildEU @EUintheUS @EUAmbUS

— Ylva Johansson (@YlvaJohansson) August 6, 2021

Delighted to see @Apple taking meaningful action to tackle child sexual abuse, and adopting one of the ideas set out by my Commission this year: https://t.co/07aWEXZbkM

— Sajid Javid (@sajidjavid) August 6, 2021

Time for others - especially @Facebook - to follow their example. https://t.co/7bwGzXNPKI

#Apple introduces a new feature in #iOS, iPadOS, watchOS and #macOS that automatically scans data on all device for child abuse content. https://t.co/D6F3rgUbEu

— Anonymous ☕ (@YourAnonRiots) August 6, 2021

However, #cybersecurity & #privacy experts are raising concerns that the project could enable mass surveillance.

"Apple introduces a new feature in #iOS, iPadOS, watchOS and #macOS that automatically scans data on all device for child abuse content.

— ❤ Trip Elix ❤ (@trip_elix) August 6, 2021

Read: https://t.co/Eew5ayqp8T

However, #cybersecurity & #privacy experts are raising concerns that the project could enable mass surveillan…
