It's his job to defend Facebook, he gets millions to do so. Would expect nothing else really. https://t.co/QDlPAxKxn4

— Paul Sawers (@psawers) March 31, 2021

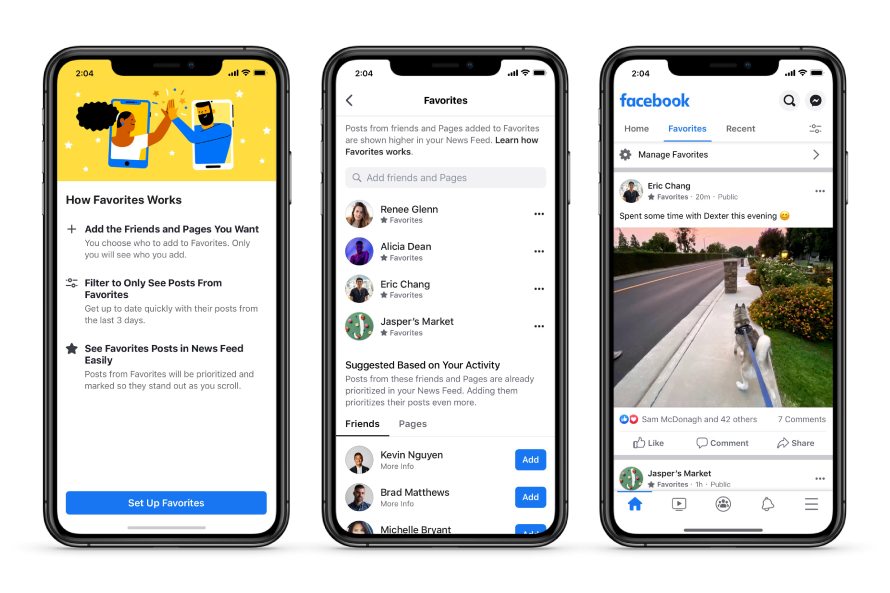

And there is the 6am ET press embargo lift on a narrative Facebook is providing users more control narrative - by making the binary algorithm on/off switch more apparent. 1 of 3 https://t.co/Mrh4pNKIC2 pic.twitter.com/znTthwi5mF

— Jason Kint (@jason_kint) March 31, 2021

my meager little choices vs. the news feed designed and constantly fine-tuned by one of the world's biggest companies to keep me engaged https://t.co/9Tm1V46ZAV

— Jacob Silverman (@SilvermanJacob) March 31, 2021

oh, and this is what the options will look like. It's basically like @Twitter's option to limit who can reply to your tweets https://t.co/ayceC6Ixxe pic.twitter.com/Y6ajRG9280

— Dave Earley (@earleyedition) March 31, 2021

People are only going to feel more comfortable with the algorithms behind News Feed if they have more visibility into how they work and more control over what they see. So we’re rolling out new tools to help people better understand and control what they see in their News Feeds.

— Nick Clegg (@nick_clegg) March 31, 2021

Nick Clegg lives in Silicon Valley but has dropped his new 5,000-word opus in the middle of his night to ride the waking US news cycle. So while the west coast sleeps, allow me to kick things off by saying this attempt to manipulate the media is all too transparently obvious https://t.co/aICMNVaYbo

— Carole Cadwalladr (@carolecadwalla) March 31, 2021

Nick Clegg has published a very long Medium post about why Facebook is, in fact, really great and not a threat to democracy. His argument is flawed! First up, he blames humanity, not Facebook, for making Facebook a polarising hellhole. Nice try, Nick! https://t.co/yXJANKNWN3

— James Temperton (@jtemperton) March 31, 2021

Woahhhhhh this is moderation solution news orgs have asked for for years!! Hot off the press - Facebook now lets users and pages turn off comments on their posts - seeya trolls! https://t.co/EfZt0N3dgU

— Flip Prior (@FlipPrior) March 31, 2021

Yes, people post the content. But the way Facebook builds system incentivizes certain types of posts, and the algorithms sort them in a very particular way.

— Alex Kantrowitz (@Kantrowitz) March 31, 2021

Facebook knows well the behavior it's asking for and encouraging. https://t.co/1bMLK2xZob

Facebook defends itself, on Medium: https://t.co/Fmg06RzXJb

— Peter Kafka (@pkafka) March 31, 2021

Hey Andy,

— Carole Cadwalladr (@carolecadwalla) March 31, 2021

Fixed this for you:

.@nick_clegg's essay is worth a read because he's my boss

ur welcome https://t.co/zNrVkuQG5c

This is a fantastic and long-awaited development for Facebook Page owners - although risks will still remain https://t.co/3iUYEkviVB

— Quiip (@Quiip) March 31, 2021

Facebook's Nick Clegg wrote a lengthy defense of surveillance advertising & FB's algorithms: https://t.co/td0VA0i4wc

— Jesse Lehrich (@JesseLehrich) March 31, 2021

it's wildly disingenuous & littered with strawman attacks, contradictions, & outright lies.

THREAD...

This piece is lengthy, but the questions surrounding technology’s power – and the companies behind it – are complex and require thoughtful consideration.

— Nick Clegg (@nick_clegg) March 31, 2021

Facebook's big defense of charges of algorithm-driven polarization and amplification of extremist content is essentially "well you clicked on that shit" https://t.co/2JXij0I3RT pic.twitter.com/kv8SR3uFwW

— James Vincent (@jjvincent) March 31, 2021

Tangent: Between Jay Carney and Nick Clegg, this week has been a reminder of how many 2010s centrist politicians have ended up at big tech companies. https://t.co/rt6OJrCl3l

— Danny Page (@DannyPage) March 31, 2021

Nick Clegg is saying here that radicalisation is the fault of users https://t.co/Z6psJW7noG

— Luke Bailey (@imbadatlife) March 31, 2021

Facebook’s making some product changes to give us more control of our Newsfeed and more transparency into what we see, including making it much easier to get to the chronological timeline https://t.co/EbU5O4HWQc

— Joanna Stern (@JoannaStern) March 31, 2021

Facebook just wants to offload responsibility while not actually addressing its core problems https://t.co/URnoCraV9Z

— Darrell Etherington (@etherington) March 31, 2021

Having spent the evening dealing with harmful transphobic and racist comments on our Facebook page, this will be a welcome feature https://t.co/y9DvnX0kRJ

— Adam Holmes (@AdamHolmes010) March 31, 2021

This piece is long, to make it look like Facebook is taking criticism seriously, but the bottom line is the same as it has always been: FB uses magical algorithms to give you what you want, so if you see bad/wrong things it's because you wanted to see them https://t.co/FxJntz3SyT

— Mathew Ingram (@mathewi) March 31, 2021

This is part of a larger pushback campaign re: polarization underway at FB. We revealed the internal "playbook" that was shared with employees recently. FB wants to counter accusations that its products are harmful to society. https://t.co/WGvFjSDC2y

— Craig Silverman (@CraigSilverman) March 31, 2021

Nick Clegg's Medium opus on polarization is classic content strategy. It's the touchstone FB will cite in testimony, comms etc. The goal is to establish it as a definitive post on par with independent reporting. Its length is a proxy for comprehensiveness and credibility.

— Craig Silverman (@CraigSilverman) March 31, 2021

Facebook's got Nick Clegg to post another eloquently worded mini-essay

— Matt Navarra (@MattNavarra) March 31, 2021

I'm starting to wonder what else he actually does for Facebook https://t.co/nRRcCq4s0Z

hey nick FYI you seem to have forgotten the immense number of apps and websites that facebook has embedded hidden pixel trackers and tracking SDKs in which means it’s not just ‘primarily’ what you do on facebook, it’s what the firm watches you do whenever you are online. pic.twitter.com/7JdfGTBPtq

— Michael Veale (@mikarv) March 31, 2021

(That follows a PR playbook developed by Facebook executives, and leaked to @RMac18 and @CraigSilverman https://t.co/4ZlUQZs9rK)

— Janosch Delcker (@JanoschDelcker) March 31, 2021

.@nick_clegg as a former politician, I know you you know that there’s a very thin line between spin & misleading. This, here is misleading. I hope it’s rather an instance of not being provided with the right info because this misleading? It only serves to widen FB’s trust deficit https://t.co/hTlRK3PqSB

— Phumzile Van Damme (@zilevandamme) March 31, 2021

We should applaud the slow wind back of Web 2.0, but our work is not finished until everything is, at most, a static HTML page again (dial in BBSs delivering niche plaintext files preferable) https://t.co/xfXv5Mzmbm

— Ben (@benv_w) March 31, 2021

In other words: Facebook is feeling the heat.

— Janosch Delcker (@JanoschDelcker) March 31, 2021

And its new PR strategy seems to say: Show us the evidence that we’re *really* a reason for societies to become more Polarized, rather than just a symptom. pic.twitter.com/ZTmiri4GKZ

You can finally turn them off—a win for news media outlets who were in the ludicrous position of having to monitor a vast number of stories.

— Belinda Barnet (@manjusrii) March 31, 2021

This was so stupid and unfair https://t.co/M8PeddvrDw

Nick Clegg’s long and boring defence of Facebook essentially boils down to “it’s not our fault, it’s yours”. In particular, he blames legacy media, while totally ignoring the fact that mass media has totally transformed in order to please FB’s algorithm. https://t.co/iyAz4Mu25l

— Oli Franklin-Wallis (@olifranklin) March 31, 2021

A Facebook executive's frame: Don't blame Facebook, blame yourself https://t.co/xRqIvQTcan

— Josh Sternberg (@joshsternberg) March 31, 2021

Expect a LOT more of this from Facebook in the coming months. "Yes, this stuff IS complex, and DOES have socioeconomic implications - but you don't understand it, do you? So make sure you let us work with you to sort it out (or else)": https://t.co/RkTbEjqVkA

— Matt Muir (@Matt_Muir) March 31, 2021

Previously, Facebook’s line was (in my words): We don’t like it if our platform is used to accelerate political polarisation. And we’re doing our best to stop that.

— Janosch Delcker (@JanoschDelcker) March 31, 2021

Clegg’s piece strikes a different tone.

He’s asking: Is Facebook really to blame? pic.twitter.com/kSJd5ZSzs0

OMG OMG OMG. This is huge, and will bring a great sense of relief to organisations who have had a defamation burden on them for social media comments made by other users. https://t.co/FJYrVqMRVe

— Amber Robinson (@missrobinson) March 31, 2021

Facebook head of policy & PR @nick_clegg responds to moral panic about the net, saying we do have agency. I agree.

— Jeff Jarvis (@jeffjarvis) March 31, 2021

But I hate this sentence: "Should governments set out what kinds of conversation citizens are allowed to participate in?" Don't even ask. https://t.co/Gg9B9rct2l

I talked with @nick_clegg about his Medium post, the evidence that social networks are polarizing, and what it would mean for Donald Trump to come back to Facebook. https://t.co/DBNKdF4GrI

— Casey Newton (@CaseyNewton) March 31, 2021

Some of this criticism is fair: companies like Facebook need to be frank about how the relationship between you and their ranking systems really works. And they need to give you more control.

— Nick Clegg (@nick_clegg) March 31, 2021

Here’s Nick Clegg’s full piece on this.

— Adrian Weckler (@adrianweckler) March 31, 2021

“People are portrayed as powerless victims, robbed of their free will. Humans have become the playthings of manipulative algorithmic systems. But is this really true? Have the machines really taken over?” https://t.co/8fSwF4V5rn

The shift in tone and approach is notable, as pointed out in this very good thread: https://t.co/jdDJUvBfNy

— Craig Silverman (@CraigSilverman) March 31, 2021

Nailed it. And we know of Parliamentary evidence Facebook tracks users across more than 8 million publishers. And from antitrust report that a majority of Facebook’s data on us comes from third parties (aka surveillance) and not when we actually choose to use Facebook services. https://t.co/xH7hc2DGIq

— Jason Kint (@jason_kint) March 31, 2021

Facebook can only *show* you hate and disinformation. They couldn’t do that if you weren’t on Facebook, so that’s on you. https://t.co/SBr7Cs6Rof

— what is proctoring if not surveillance persevering (@hypervisible) March 31, 2021

My issue with this analogy is that the decision making takes place in a context where trust is established -- you understand your partner and feel your partner understands you.

— Katie Collins (@katiecollins) March 31, 2021

Whereas users' relationships with FB today are shaped by historic distrust and lack of transparency https://t.co/789T1qlEU8 pic.twitter.com/BUc1jxWY6T

"Well, he would say that, wouldn't he?"

— Ed Bott (@edbott) March 31, 2021

I mean, how many millions does Facebook pay Nick Clegg to say exactly this sort of thing? https://t.co/P0vwUfY89j

My thoughts on @Facebook 'favorites' and @nick_clegg blog today. Rather that 'two to tango' it's more accurate to say Facebook is 'dancing in the dark' with your data. @FBoversight https://t.co/xFDfurdJlV pic.twitter.com/xB1zbARM0R

— Damian Collins (@DamianCollins) March 31, 2021

Extreme filter bubbles do seem rare for ppl on social media. And still lots of research to be done. But! FB's internal research shows groups recommendations and other systems push people to extremes and elevate polarizing content. Of course, this is not part of FB's messaging.

— Craig Silverman (@CraigSilverman) March 31, 2021

.@nick_clegg's essay is worth a read as it addresses some of the macro charges levied against Facebook, and social media more generally, of late. It acknowledges where critics have a point, where they simply miss the mark and how Facebook is charting a course forward. https://t.co/6fZhcbocAk

— Andy Stone (@andymstone) March 31, 2021

But the caricatures of social media's algorithms don't tell the full story: You are an active participant in the experience. The personalized “world” of your News Feed is shaped heavily by your choices and actions.

— Nick Clegg (@nick_clegg) March 31, 2021

It would be helpful if Facebook acknowledged that a large number of the changes it made to ranking posts happened because of outside pressure to change. https://t.co/ehtH9tMz9x

— Shira Ovide (@ShiraOvide) March 31, 2021

Nick Clegg (VP of Global Affairs, Facebook) writes on the interaction between algorithmic feeds and individual choice. In response to a recent Atlantic article about the product being a "Doomsday Machine" - worth a read. https://t.co/uAj8ZU7csq pic.twitter.com/nBsJ6zLw1W

— Andrew Chen (@andrewchen) March 31, 2021

for example about 43% of all Android apps contain a facebook tracker https://t.co/4FXLRQfKZI pic.twitter.com/brnu4WVoqa

— Michael Veale (@mikarv) March 31, 2021

"lengthy medium post" is not a great selling point

— Cecilia Kang 강 미선 (@ceciliakang) March 31, 2021

Announcing a bunch of new controls for Facebook users - including being able to choose who can comment on your public posts + a simple way to change your News Feed from algorithmically ranked to chronological: https://t.co/u4qqcjfp0t

— Alex Belardinelli (@abelardinelli) March 31, 2021

Don’t want #algorithms control your #Facebook news feed? The Feed Filter Bar offers now easy access to ‘Most Recent’, making it simpler to switch between an algorithmically-ranked news feed and a feed sorted chronologically with the newest posts first. https://t.co/zZJC7eYq2o

— Aura Salla (@AuraSalla) March 31, 2021

The debate over social media's role in society often centers on concerns that people are being manipulated by algorithms they can’t control. Some say the machines have already won. Read my take here: https://t.co/B1a7UbxEzZ

— Nick Clegg (@nick_clegg) March 31, 2021

Hi Andy. Did you ever complete the fact check on the New York Post story you banned from your platform? Curious to see those results. https://t.co/y1OCH5MFVn

— Stephen L. Miller (@redsteeze) March 31, 2021

It's striking that right after a section when Clegg emphasizes that FB downranks clickbait and sensational and false content in News Feed, he acknowledges that websites with sensational and spammy content disproportionally get their traffic from... Facebook. pic.twitter.com/4z0tAk8TeL

— Craig Silverman (@CraigSilverman) March 31, 2021

Facebook announces big changes to comments and your News Feed https://t.co/HxxgIDN4RR

— iMore (@iMore) March 31, 2021

Facebook users will have more control over what's posted to their profiles, but media companies, celebrities, and other high-profile pages that have struggled to moderate comments on Facebook posts are most likely to feel the impact of this change. https://t.co/qE4Bof4pMI

— Nieman Lab (@NiemanLab) March 31, 2021

#BREAKING NEWS: Facebook will allow every user, and Pages (media, politicians, brands, everyone) to turn off comments on their public posts

— Dave Earley (@earleyedition) March 31, 2021

https://t.co/ayceC6Ixxe

Facebook allowing news providers to turn off comments on posts is a big step towards detoxifying social media. There are a lot of important stories which are currently poisoned by horrendous comments almost as soon as they are shared. https://t.co/VkzNl6uH3c

— Ed McConnell (@EdMcConnellKM) March 31, 2021

Facebook will now let Page Admins turn off comments on their posts

— Matt Navarra (@MattNavarra) March 31, 2021

BUT

What will brands + publishers end up choosing most often?

- Engagement / Reach (Comments ON)

or

- Moderation (Comments OFF) https://t.co/JcvBFPxFtg pic.twitter.com/BwWrwWeuDs

Stop the presses. This is the biggest social media news in weeks. Game-changing. https://t.co/n2OksLfTzR

— Chris Martin (@ChrisMartin17) March 31, 2021

Facebook now lets users and pages turn off comments on their posts | Australian media | The Guardian https://t.co/7YEcqcFR3h

— lunamoth (@lunamoth) March 31, 2021

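Facebook now supports comment permission settings on profiles and Pages. ▲ Allow comments from everyone ▲ Allow comments from friends only ▲ Allow comments only from mentioned profiles/Pages

Similar to Twitter's reply policy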
